Revision as of 19:00, 6 November 2007

What is "the gesture follower"?

The gesture follower is a set of Max/MSP modules for real-time gesture recognition and following. It is part of the MnM toolbox of the FTM library (see Download). The general idea is to obtain parameters by comparing a live performance with a set of prerecorded examples.

The gesture follower can answer the following two questions:

- Which gesture is it? (If you don't like black-and-white answers, you can get "greyscale" answers: how close is the performance to each of the recorded gestures?)

- Where are we? (beginning, middle or end...)
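This page does not describe the algorithm inside the gesture follower, so the following is only an illustration of how both questions can be answered at once. It is a minimal Python sketch assuming one common template-following technique: a left-to-right hidden Markov model with one state per recorded frame, updated incrementally with the forward algorithm. The names `TemplateFollower` and `follow`, the transition probabilities and the Gaussian width `sigma` are hypothetical choices, not the API or internals of the MnM modules.

```python
import math


class TemplateFollower:
    """Follows one recorded gesture (a list of frames) in real time.

    Illustrative assumptions, not the Max/MSP module's actual design:
    one state per template frame, left-to-right transitions
    (stay / advance 1 / advance 2) and an isotropic Gaussian
    observation model of width `sigma`.
    """

    def __init__(self, template, sigma=0.2, trans=(0.25, 0.5, 0.25)):
        self.template = [list(frame) for frame in template]
        self.sigma = sigma
        self.trans = trans          # transition probabilities, must sum to 1
        self.alpha = None           # normalized forward probabilities
        self.log_likelihood = 0.0   # running sum of the log normalizers

    def _obs(self, frame, state):
        # Unnormalized Gaussian likelihood of `frame` given one template state.
        d2 = sum((x - y) ** 2 for x, y in zip(frame, self.template[state]))
        return math.exp(-d2 / (2.0 * self.sigma ** 2))

    def step(self, frame):
        """Consume one incoming frame (one forward-algorithm update)."""
        n = len(self.template)
        if self.alpha is None:
            # Assume the performance starts at the beginning of the template.
            prior = [1.0] + [0.0] * (n - 1)
        else:
            prior = [0.0] * n
            for i, a in enumerate(self.alpha):
                for jump, p in enumerate(self.trans):
                    # Mass that would run past the end stays on the last state.
                    prior[min(i + jump, n - 1)] += a * p
        alpha = [prior[j] * self._obs(frame, j) for j in range(n)]
        total = sum(alpha)
        if total > 0.0:
            self.alpha = [a / total for a in alpha]
            self.log_likelihood += math.log(total)
        else:
            # Frame far from every reachable state: keep the prior, add a large penalty.
            self.alpha = prior
            self.log_likelihood += -700.0
        return self

    def position(self):
        """Expected progress through the template: 0 = beginning, 1 = end."""
        n = len(self.template)
        if self.alpha is None or n < 2:
            return 0.0
        return sum(i * a for i, a in enumerate(self.alpha)) / (n - 1)


def follow(templates, performance, sigma=0.2):
    """Return ({name: similarity}, {name: position}) after the whole performance."""
    followers = {name: TemplateFollower(t, sigma) for name, t in templates.items()}
    for frame in performance:
        for f in followers.values():
            f.step(frame)
    # "Greyscale" answer: softmax over the accumulated log-likelihoods.
    best = max(f.log_likelihood for f in followers.values())
    weights = {name: math.exp(f.log_likelihood - best) for name, f in followers.items()}
    z = sum(weights.values())
    similarity = {name: w / z for name, w in weights.items()}
    positions = {name: f.position() for name, f in followers.items()}
    return similarity, positions


# Usage: two 1-D templates, a rising and a falling ramp of 11 frames each,
# and a performance that follows the first half of the rising ramp.
up = [[i / 10] for i in range(11)]
down = [[1 - i / 10] for i in range(11)]
similarity, positions = follow({"up": up, "down": down},
                               [[0.0], [0.1], [0.2], [0.3], [0.4]])
print(similarity, positions)
```

In this sketch the normalized likelihoods play the role of the "greyscale" answer to "which gesture is it?", and the expected state index divided by the template length answers "where are we?". Because the update only needs the previous forward probabilities, each new frame costs a fixed amount of work, which is what makes this kind of approach usable in real time.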

What is a "gesture" anyway?

A gesture here can be any multi-dimensional temporal curve, sampled at a relatively low rate compared to sound. With the current Max/MSP implementation, the sampling period must be longer than 1 millisecond; a period of 10-20 milliseconds is typically recommended. There is no upper limit (if you have time...).

Technically, in Max/MSP the data can come from any stream of lists, for example:

- sound parameters (pitch, amplitude, etc.)

- mouse, joystick coordinates

- parameters from video tracking (EyesWeb, Jitter, etc.)

- Wiimote

- MIDI

- etc...

- any combination of the above (did you say multimodal?)
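The page does not show how several streams become one multi-dimensional gesture. The following minimal Python sketch assumes the simplest scheme: keep the latest list received from each source and concatenate them, in a fixed order, once per sampling period. `FrameAssembler`, `update` and `frame` are hypothetical names used only for illustration, not objects from FTM or MnM; the 1 ms lower bound is the one stated above.

```python
class FrameAssembler:
    """Hypothetical helper that merges several input streams into one frame.

    Each source (mouse, pitch tracker, video tracker, ...) sends lists at
    its own pace; `frame()` concatenates the most recent list from every
    source, in a fixed order, and would be called once per sampling period.
    """

    MIN_PERIOD_MS = 1.0  # the page states the period must be larger than 1 ms

    def __init__(self, sources, period_ms=15.0):
        if period_ms <= self.MIN_PERIOD_MS:
            raise ValueError("sampling period must be larger than 1 ms")
        self.sources = list(sources)  # order defines the frame layout
        self.period_ms = period_ms
        self.latest = {}

    def update(self, source, values):
        """Store the latest list received from one source."""
        if source not in self.sources:
            raise KeyError(f"unknown source: {source}")
        self.latest[source] = [float(v) for v in values]

    def frame(self):
        """Concatenated frame, or None until every source has sent data."""
        if any(s not in self.latest for s in self.sources):
            return None
        out = []
        for s in self.sources:
            out.extend(self.latest[s])
        return out


asm = FrameAssembler(["mouse", "pitch"], period_ms=15.0)
asm.update("mouse", [0.3, 0.7])
first = asm.frame()          # None: no pitch value yet
asm.update("pitch", [440.0])
second = asm.frame()
print(first, second)
```

When modalities are mixed like this, dimensions with very different ranges (a pitch in Hz next to normalized mouse coordinates) would probably need rescaling before gestures are compared; otherwise the largest-range dimension dominates any distance measure.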

Documentation

Examples

Links

Download

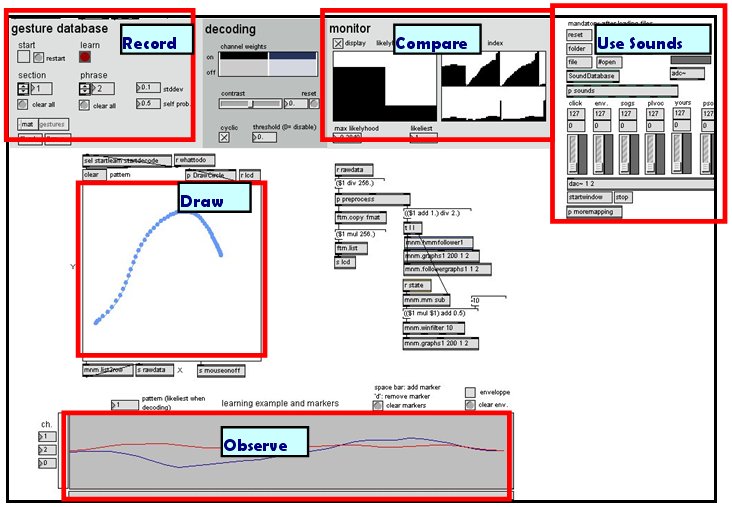

Tutorial Workspace: overview

Get an overview of the interface functions.

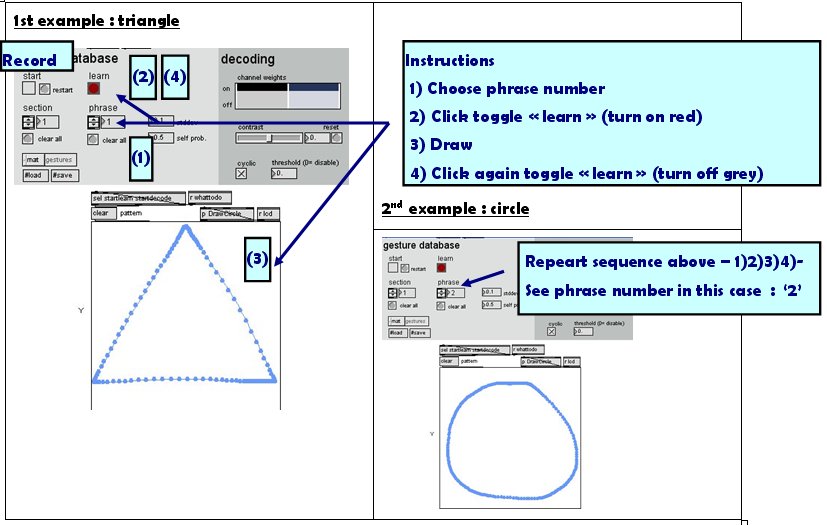

1st STEP: Record gestures

Let’s start with two simple drawings: a triangle and a circle.

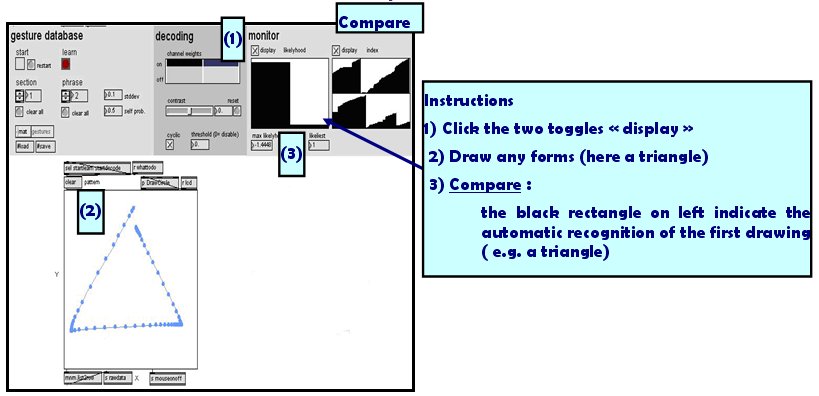

2nd STEP: Compare

Draw a figure and see how similar it is to your two reference drawings.

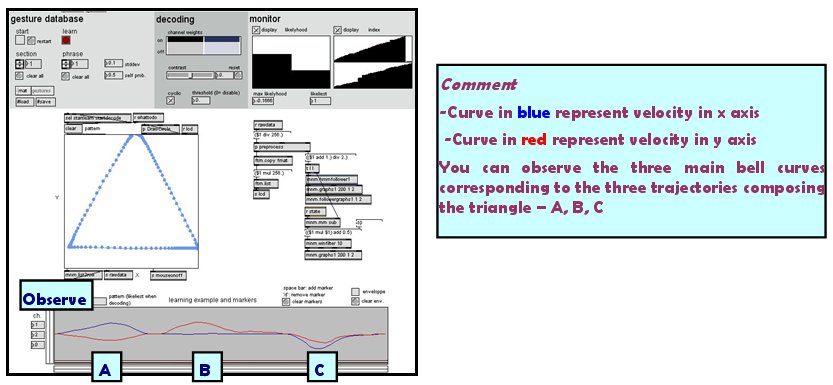

3rd STEP: Observe

Pay attention to the curves below. They represent the velocity of the mouse trajectory along the X and Y axes, which gives useful temporal information about how you perform your drawing.
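The page does not say exactly how these velocity curves are computed. One plausible way, sketched below in Python under that assumption, is a first-order finite difference between consecutive mouse positions divided by the sampling period. The function name `velocities` and the use of pixels as the position unit are illustrative.

```python
def velocities(points, period_ms):
    """Finite-difference velocity along X and Y.

    `points` are (x, y) mouse positions sampled every `period_ms`
    milliseconds (e.g. in pixels); the result is in units per second
    and has one entry fewer than `points`.
    """
    if period_ms <= 0:
        raise ValueError("period_ms must be positive")
    dt = period_ms / 1000.0
    return [((x1 - x0) / dt, (y1 - y0) / dt)
            for (x0, y0), (x1, y1) in zip(points, points[1:])]


trajectory = [(0, 0), (2, 1), (4, 1)]   # three samples, 20 ms apart
vel = velocities(trajectory, 20.0)
print(vel)
```

Finite differences amplify jitter in the input, so a real patch would likely smooth the positions or the velocities (for example with a short moving average) before displaying or comparing them.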

Connection with EyesWeb XMI

EyesWeb XMI, the open platform for real-time analysis of multimodal interaction, can be connected to Max/MSP through the OSC (Open Sound Control) protocol. OSC is an open, message-based protocol originally developed for communication between computers and synthesizers (cf. wiki).
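To give an idea of what travels over that connection, here is a minimal Python encoder for a single OSC 1.0 message: an address pattern, a type-tag string starting with a comma, then the arguments (big-endian int32, float32, or null-terminated strings), with every string padded to a multiple of 4 bytes. The message is sent as one UDP datagram, the most common OSC transport. The address `/eyesweb/hand`, the host and port 9000 are placeholders; EyesWeb XMI and the receiving Max/MSP patch each have their own settings for these.

```python
import socket
import struct


def _osc_string(s):
    """OSC string: ASCII bytes, null-terminated, padded to a multiple of 4."""
    b = s.encode("ascii") + b"\x00"
    return b + b"\x00" * (-len(b) % 4)


def osc_message(address, *args):
    """Encode an OSC 1.0 message with int32 ('i'), float32 ('f') and string ('s') arguments."""
    tags = ","
    payload = b""
    for a in args:
        if isinstance(a, bool):
            raise TypeError("booleans are not handled in this sketch")
        if isinstance(a, int):
            tags += "i"
            payload += struct.pack(">i", a)
        elif isinstance(a, float):
            tags += "f"
            payload += struct.pack(">f", a)
        elif isinstance(a, str):
            tags += "s"
            payload += _osc_string(a)
        else:
            raise TypeError(f"unsupported argument type: {type(a).__name__}")
    return _osc_string(address) + _osc_string(tags) + payload


def send_osc(message, host="127.0.0.1", port=9000):
    """Send one encoded message as a UDP datagram (host and port are placeholders)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message, (host, port))


msg = osc_message("/eyesweb/hand", 0.5, 0.25)   # hypothetical address: x, y of a tracked hand
# send_osc(msg)  # uncomment once a receiver is listening on the chosen port
```

In practice a ready-made OSC library would usually be preferred; the point here is only to show that each OSC message is a small, self-describing binary packet, which is why streams of tracking parameters from EyesWeb XMI can feed a Max/MSP patch directly.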