Latest revision as of 12:29, 4 November 2008


What is the gesture follower?

The gesture follower is a set of Max/MSP modules that performs gesture recognition and gesture following in real time. It is part of the MnM toolbox of the FTM library (see Download). The general idea is to derive parameters by comparing a live performance with a set of prerecorded examples.

The gesture follower can answer two questions:

- Which gesture is it? (If you don't like black-and-white answers, you can get "greyscale" answers: how close are you to each of the recorded gestures?)

- Where are we? (beginning, middle or end of the gesture)
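This page does not describe the follower's internals. As a rough illustration of how both questions can be answered at once, here is a simplified sketch, assuming each recorded example is a list of frames and modelling it as a left-to-right chain of states (one per recorded sample) updated with a forward recursion. The class `ToyFollower`, the Gaussian width `sigma` and the stay/advance/skip probabilities are hypothetical choices for this illustration, not the module's API or parameters.

```python
import math


class ToyFollower:
    """Follows one recorded example, modelled as a left-to-right chain of states
    (one state per recorded sample). Hypothetical illustration only."""

    def __init__(self, template, sigma=0.2, transitions=(0.4, 0.4, 0.2)):
        self.template = template        # list of frames, each a tuple of floats
        self.sigma = sigma              # assumed observation noise (hypothetical value)
        self.transitions = transitions  # assumed stay / advance / skip probabilities
        self.alpha = None               # normalized forward probabilities per state
        self.log_likelihood = 0.0       # accumulated evidence for this example

    def _emission(self, frame, state):
        # Gaussian similarity between the incoming frame and one recorded sample;
        # the small floor keeps the total strictly positive.
        d2 = sum((a - b) ** 2 for a, b in zip(frame, self.template[state]))
        return math.exp(-d2 / (2 * self.sigma ** 2)) + 1e-12

    def step(self, frame):
        n = len(self.template)
        if self.alpha is None:
            prior = [1.0] + [0.0] * (n - 1)  # assume the performance starts at sample 0
        else:
            prior = [0.0] * n
            for i, a in enumerate(self.alpha):
                for jump, p in enumerate(self.transitions):
                    prior[min(i + jump, n - 1)] += a * p  # jumps past the end stay on the last state
        alpha = [prior[j] * self._emission(frame, j) for j in range(n)]
        total = sum(alpha)
        self.alpha = [a / total for a in alpha]
        self.log_likelihood += math.log(total)

    def position(self):
        """Expected progress through the example: 0.0 = beginning, 1.0 = end."""
        if self.alpha is None:
            return 0.0
        n = len(self.template)
        return sum(i * a for i, a in enumerate(self.alpha)) / max(n - 1, 1)


def recognize(followers):
    """Convert accumulated log-likelihoods into 'greyscale' scores summing to 1."""
    best = max(f.log_likelihood for f in followers.values())
    weights = {k: math.exp(f.log_likelihood - best) for k, f in followers.items()}
    total = sum(weights.values())
    return {k: w / total for k, w in weights.items()}


# Usage: two 1-D examples and a live input that performs the first half of "rise".
templates = {
    "rise": [(i / 9,) for i in range(10)],
    "fall": [(1 - i / 9,) for i in range(10)],
}
followers = {name: ToyFollower(t) for name, t in templates.items()}
for frame in [(i / 9,) for i in range(5)]:
    for follower in followers.values():
        follower.step(frame)
scores = recognize(followers)            # "rise" gets almost all of the weight
progress = followers["rise"].position()  # somewhat below 4/9, the fraction actually performed
```

The recognition answer is the set of scores across examples; the following answer is the position within the most likely example. Lowering the "stay" probability makes the position estimate lag less but tolerate slower performances less well.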

What is a gesture anyway?

Here, a gesture can be any multi-dimensional temporal curve, sampled at a relatively low rate compared to sound. With the current Max/MSP implementation, the sampling period must be at least 1 millisecond; 10-20 milliseconds is typically recommended. There is no upper limit (if you have time...).

There is no technical limit on the dimension of the gesture data (the number of sensor channels) other than the CPU load your computer can handle (20 channels, for example, is generally no problem).
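To make these constraints concrete, here is a minimal sketch, assuming a gesture is stored as a list of fixed-size frames sampled at a constant period. The `Gesture` class and its fields are hypothetical, not part of the gesture follower. For example, a 2-second gesture sampled every 10 ms holds 200 frames.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

MIN_PERIOD_MS = 1.0  # lower bound for the sampling period stated on this page


@dataclass
class Gesture:
    """A multi-dimensional temporal curve: fixed-size frames at a constant period.
    Hypothetical container for illustration, not part of the gesture follower."""
    period_ms: float   # sampling period; 10-20 ms is the recommended range
    dimension: int     # number of sensor channels per frame
    frames: List[Tuple[float, ...]] = field(default_factory=list)

    def __post_init__(self):
        if self.period_ms < MIN_PERIOD_MS:
            raise ValueError("the sampling period must be at least 1 ms")
        if self.dimension < 1:
            raise ValueError("a gesture needs at least one channel")

    def append(self, frame):
        """Add one frame; every frame must have the same number of channels."""
        if len(frame) != self.dimension:
            raise ValueError(f"expected {self.dimension} values, got {len(frame)}")
        self.frames.append(tuple(float(v) for v in frame))

    def duration_ms(self):
        """Recording time covered by the frames (frame count times period)."""
        return len(self.frames) * self.period_ms


# Usage: 2 seconds of 2-D mouse data sampled every 10 ms.
g = Gesture(period_ms=10.0, dimension=2)
for k in range(200):
    g.append((k * 0.01, 0.5))
```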

In Max/MSP, the data fed to the gesture follower is taken from a list, which can come from, for example:

- sound parameters (pitch, amplitude, etc.)

- mouse or joystick coordinates

- parameters from video tracking (EyesWeb, Jitter, etc.)

- Wiimote

- MIDI

- any other sensor data

- any combination of the above (did you say multimodal?)
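The page does not prescribe how to combine several sources. One straightforward approach, sketched here under that assumption, is to concatenate the current values of each source into one flat frame, mirroring the single list that feeds the follower in Max/MSP. The function name `make_frame` and the optional per-source scaling are hypothetical.

```python
def make_frame(sources, scales=None):
    """Flatten several sources into one list, as a single Max list would be.

    sources: dict mapping a source name to its current values (insertion order kept).
    scales:  optional dict of per-source factors (a hypothetical choice to bring
             channels with very different ranges, e.g. Hz and pixels, closer together).
    """
    scales = scales or {}
    frame = []
    for name, values in sources.items():
        factor = scales.get(name, 1.0)
        frame.extend(float(v) * factor for v in values)
    return frame


# Usage: normalized mouse position combined with pitch (Hz) and amplitude.
frame = make_frame(
    {"mouse": [0.5, 0.25], "pitch": [440.0], "amplitude": [0.8]},
    scales={"pitch": 0.001},
)
```

The order of the sources must stay the same for recording and for performance, since each position in the list is treated as one channel.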

Download, license and referencing

The gesture follower comes free with the FTM download (http://ftm.ircam.fr/index.php/Download), in the folder .../MnM.BETA/examples/gesture_follower/. Note that FTM must be installed.

The latest version, v0.3, can be downloaded here: http://recherche.ircam.fr/equipes/temps-reel/gesturefollower/gesture_follower_v0.3.zip

This software is intended for artistic work and/or scientific research. Commercial use is reserved. Copyright 2004-2007 IRCAM - Centre Pompidou.

If appropriate, please cite the Real Time Interaction Team, IRCAM, or reference the following article: F. Bevilacqua, F. Guédy, N. Schnell, E. Fléty, N. Leroy, "Wireless sensor interface and gesture-follower for music pedagogy" (http://mediatheque.ircam.fr/articles/textes/Bevilacqua07a/), Proceedings of the International Conference on New Interfaces for Musical Expression (NIME 07), New York, NY, USA, pp. 124-12, 2007.

Getting help and disclaimer

All feedback, problem reports, bug reports and feature requests are welcome, and we will do our best to help you. Please post messages and questions directly to the FTM mailing list (http://listes.ircam.fr/wws/info/ftm).

Nevertheless, this is work in progress! Use this software at your own risk. We do not assume any responsibility for problems caused by its use.

References

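The messages used by the various modules are listed in the reference document: http://recherche.ircam.fr/equipes/temps-reel/gesturefollower/gesturefollower-reference.01.pdf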

Getting started

LCD example

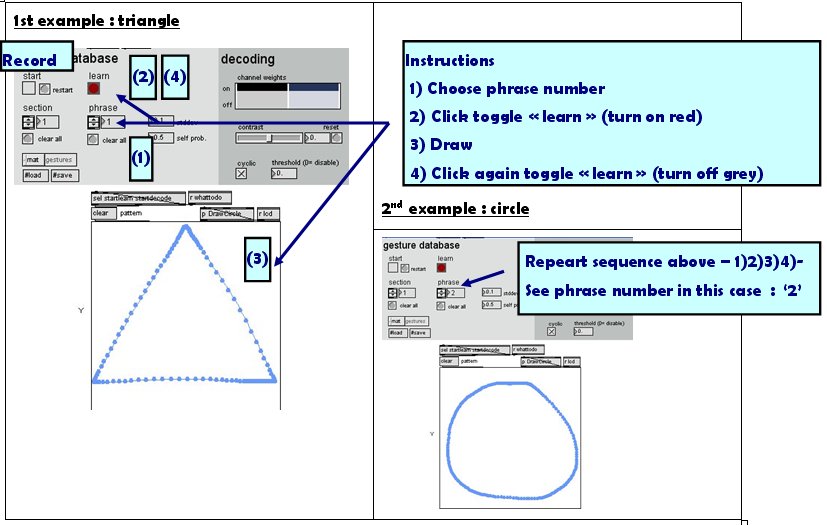

1st step: Record gestures

Let's start by recording two simple drawings: a triangle and a circle.

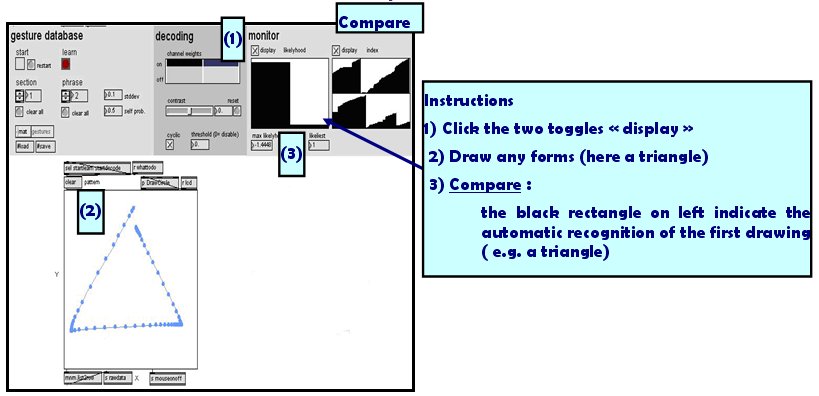
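In the patch, this step is done by drawing with the mouse. Outside Max/MSP, the same idea can be sketched as storing each recorded drawing under a label. The `Recorder` class is hypothetical and assumes each drawing is a plain list of (x, y) positions; it is not the MnM/FTM interface.

```python
import math


class Recorder:
    """Collects labelled example drawings as lists of (x, y) mouse positions.
    Hypothetical helper for illustration; not the MnM/FTM interface."""

    def __init__(self):
        self.examples = {}   # label -> list of (x, y) points
        self._label = None
        self._points = None

    def start(self, label):
        self._label, self._points = label, []

    def add(self, x, y):
        if self._points is None:
            raise RuntimeError("call start() before adding points")
        self._points.append((float(x), float(y)))

    def stop(self):
        if self._points is None:
            raise RuntimeError("no recording in progress")
        self.examples[self._label] = self._points
        self._label, self._points = None, None


# Usage: record a triangle and a circle, as in the first step.
rec = Recorder()
rec.start("triangle")
for x, y in [(0.0, 0.0), (1.0, 0.0), (0.5, 0.87), (0.0, 0.0)]:
    rec.add(x, y)
rec.stop()

rec.start("circle")
for k in range(32):
    angle = 2 * math.pi * k / 32
    rec.add(0.5 + 0.5 * math.cos(angle), 0.5 + 0.5 * math.sin(angle))
rec.stop()
```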

2nd step: Compare

Draw a figure and see how similar it is to your two reference drawings.

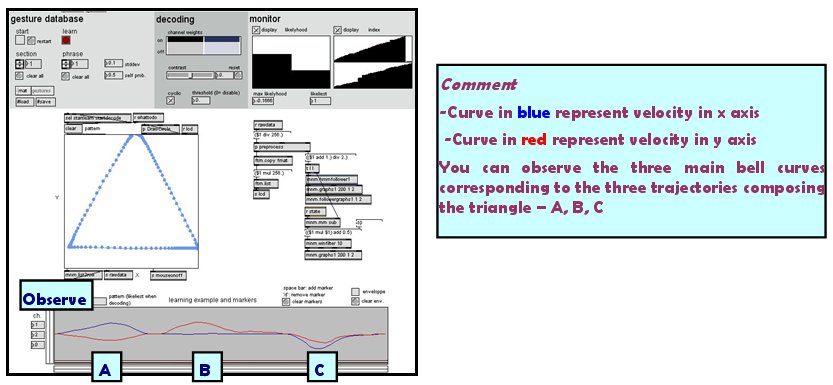
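The gesture follower compares your drawing with the examples while you draw. A simpler, offline way to illustrate "how similar" is sketched below, using a different technique that is named plainly here: linear resampling of both drawings to a common length, then the mean point-to-point distance mapped to a score between 0 and 1. The functions `resample` and `similarity` and the `scale` parameter are hypothetical.

```python
import math


def resample(points, n):
    """Linearly interpolate a list of (x, y) points to n points evenly spaced in time."""
    if n < 2:
        raise ValueError("n must be at least 2")
    if len(points) == 1:
        return [points[0]] * n
    out = []
    for k in range(n):
        t = k * (len(points) - 1) / (n - 1)   # fractional index into the original
        i = min(int(t), len(points) - 2)
        frac = t - i
        (x0, y0), (x1, y1) = points[i], points[i + 1]
        out.append((x0 + frac * (x1 - x0), y0 + frac * (y1 - y0)))
    return out


def similarity(a, b, n=32, scale=0.2):
    """1.0 for identical drawings, decreasing toward 0.0 as they differ.
    The exponential mapping and 'scale' are arbitrary choices for this sketch."""
    ra, rb = resample(a, n), resample(b, n)
    mean_dist = sum(math.dist(p, q) for p, q in zip(ra, rb)) / n
    return math.exp(-mean_dist / scale)


# Usage: compare a hand-drawn triangle with two reference drawings.
triangle = [(0.0, 0.0), (1.0, 0.0), (0.5, 0.87), (0.0, 0.0)]
circle = [(0.5 + 0.5 * math.cos(2 * math.pi * k / 32),
           0.5 + 0.5 * math.sin(2 * math.pi * k / 32)) for k in range(33)]
drawing = [(0.05, 0.0), (1.0, 0.05), (0.5, 0.9), (0.0, 0.02)]
scores = {"triangle": similarity(drawing, triangle),
          "circle": similarity(drawing, circle)}
```

Because resampling is done in time (sample index) rather than along the path, this score is sensitive to where the drawing starts and in which direction it is traced, much like a comparison of temporal curves.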

3rd step: Observe

Pay attention to the curves below. They show the velocity of the mouse trajectory along the X and Y axes, which gives useful temporal information about how you performed your drawing.
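Velocity curves like these can be approximated from the sampled mouse positions by finite differences. A minimal sketch, assuming a constant sampling period (how the patch itself computes the curves is not described here); the function name `velocities` is hypothetical.

```python
def velocities(points, period_s):
    """Finite-difference velocity between consecutive (x, y) mouse positions.

    Assumes a constant sampling period in seconds; returns two curves (vx, vy)
    in position units per second, one value per pair of consecutive samples.
    """
    if period_s <= 0:
        raise ValueError("period must be positive")
    vx = [(x1 - x0) / period_s for (x0, _), (x1, _) in zip(points, points[1:])]
    vy = [(y1 - y0) / period_s for (_, y0), (_, y1) in zip(points, points[1:])]
    return vx, vy


# Usage: three positions sampled every 0.5 s (move right, then move up).
vx, vy = velocities([(0.0, 0.0), (2.0, 0.0), (2.0, 4.0)], period_s=0.5)
```

Two drawings with the same shape but a different rhythm give the same positions and different velocity curves, which is why these curves carry the temporal information.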

Connection with EyesWeb XMI

EyesWeb XMI, the open platform for real-time analysis of multimodal interaction, can be connected to Max/MSP through the OSC (Open Sound Control) protocol. OSC is an open, message-based protocol originally developed for communication between computers and synthesizers (cf. wiki).
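The OSC message format itself is simple. As a hedged sketch (it does not show EyesWeb XMI's own OSC configuration, which is not described here), the code below encodes an OSC 1.0 message by hand and sends it as a UDP datagram. The address `/eyesweb/xy` and port 7400 are hypothetical placeholders that must match whatever the Max/MSP side listens on.

```python
import socket
import struct


def _osc_string(text):
    """OSC strings are ASCII, null-terminated and padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address, *args):
    """Encode an OSC 1.0 message with int32 ('i'), float32 ('f') or string ('s') args."""
    tags, payload = ",", b""
    for arg in args:
        if isinstance(arg, bool):
            raise TypeError("booleans are not handled in this sketch")
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)   # big-endian 32-bit integer
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)   # big-endian 32-bit float
        elif isinstance(arg, str):
            tags += "s"
            payload += _osc_string(arg)
        else:
            raise TypeError(f"unsupported argument type: {type(arg).__name__}")
    return _osc_string(address) + _osc_string(tags) + payload


def send_osc(message, host="127.0.0.1", port=7400):
    """Send one encoded message as a UDP datagram (host and port are placeholders)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message, (host, port))


# Usage: a normalized x/y position under a hypothetical address.
msg = osc_message("/eyesweb/xy", 0.5, 0.25)
# send_osc(msg)  # uncomment when something is listening on the Max/MSP side
```

Each OSC message carries its own type tags, so the receiving side can unpack the values into a list without a separate schema.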

Examples

Version v0.3 includes the following examples:

- writing

- Wii

- audio parameters (pitch, periodicity, energy)

- voice (MFCC)

Links

Credits and Acknowledgements

Real Time Musical Interaction - Ircam - CNRS STMS

Frédéric Bevilacqua, Rémy Muller, Norbert Schnell, Fabrice Guédy, Jean-Philippe Lambert, Aymeric Devergié, Anthony Sypniewski, Bruno Zamborlin, Donald Glowinski (thanks for the screen captures!)